I've just found interesting post Test Driven Code Review. Its title and the 2nd sentence caught my attention:

When reviewing or just reading such code, it's often best to first read the tests.That is something I started to use some time ago and I had very good experience with it many times. But not always. As Philip Zembrod writes further he had similar experience and coined terms well-specifying tests and poorly-specifying tests. I like them. What could be the better place to understand code you've just got to review without deep understanding of requirements and domain it touches? Well, I agree with the author it should be tests. But they have to be clean, easy to read and simple. After being guilty myself in writing obscure tests in the past, I try to make them as easy and concise as possible for last couple of years. I think my turning point was when I (gradually with time) created a unit test using 13 mocks. Every time some of test (usually more at once) failed I had to go to deep analysis whether I really introduced bug or I just need to tweak one of those inconvenient mocks, because requirements changed.

I have learned a lot from that experience

- Limited responsibility of class will ask for less mocks. It is always possible to rearrange responsibilities in some way to allow simpler testing (and understanding both production and test code).

- Test should explain main points, hiding irrelevant details (like creation of data it needs, but they are not the reason for existence of this test; hiding details of how mocking library works while saying what you want to achieve).

- Test should be concise, only couple of lines all focused to tell you a story. What environment you have, what you do with tested class and what effects you should see as the result.

But not everybody does

Sad part is I always find hard to read tests that are completely obscuring the main point. Apart from plenty of "easy" to fix tests I have seen also couple of ugly ones. The worst was 3500 lines long test with 32 mocks! It was worse than really thick book with unappealing name. I've never bothered to look at production code. (Fortunately I did not need to). OK, there is even sadder part: I am afraid the author(s) will never learn anything from this test experience. Maybe only one incorrect thing - unit testing is meant to be hard :-)Back to reviews

I usually try to spread good ideas and I've found the code reviews are pretty good medium for suggesting simple improvements in tests without being caught as mentoring. Many times I found developers are too proud to accept explicit help, which is pity in my opinion. Disadvantage of this approach is high probability helped person will miss the bigger picture. But that might come later.I was helping my colleague to understand piece of code he needed to modify. Finally he was satisfied with changes and executed newly written test. Green. My task is done, let's go back to my own business. As I was leaving I got an idea. I have asked him something I usually do instead of open endorsing of TDD.

Me: Have you seen the test fail?He: No.

Me: So how do you know it works? Because it is green? Green does not tell you a test works correctly. So I grabbed the keyboard again, commented whole tested method out and executed test again. Green. I did not expect I will make my point so clearly. After some exploration we found that he forgot to replay and verify a new instance of mock. That's the thing I hate about EasyMock. To overcome this problem I usually register all mocks in a collection and always replay and verify all mocks in the collection. And I am used to do verification during tear down. I usually do that in superclass of test. I wanted to write a piece of code showing how to achieve that and went to EasyMock site to check on some details. And I have found that since version 2.5.2 it has

EasyMockSupport that does exactly that! I do not know what took them so long to add it, but I am glad it is part of the library now.

Huray!

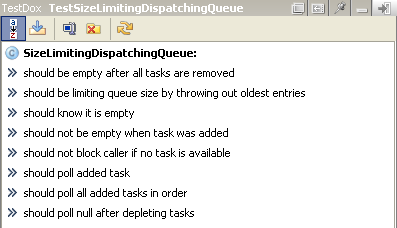

My favourite plugin for IntelliJ IDEA TestDox finally works in EAP of version 8. So I can see nicely formatted test outline again. If I needed to wait longer I would be afraid that I could stop using patterntestShould... that I like so much :-)

For those who are not familiar with this approach it as as follows. Tests should describe what tested class should do. So it helps if test methods are named accordingly - describing expected behaviour. The best naming convention is

For those who are not familiar with this approach it as as follows. Tests should describe what tested class should do. So it helps if test methods are named accordingly - describing expected behaviour. The best naming convention is testShouldDoSomething which should read as a sentence in English (of course name of tested class, or tested class in some context, makes begining of the sentence).

So for example let's have FullStackTest (specification) with method testShouldThrowExceptionAfterPush. This is time when TestDox cames into picture - it is able to convert it to Full stack should throw exception after push. Which feels really like specification of behaviour. And that's whole point. It seems subtle, but it works miracles. This plugin helps me to create good test names. It is even more important when I have lazy mood, because it motivates me to do so.

Its biggest disadvantage is that my Eclipse-based colleagues do not have anything like this (I tried to find it several times without any success, so if you know about something similar, tell me please).

Note: I have tried to update it to new IntelliJ IDEA API myself, but it was changed a lot and without any previous knowledge of IntelliJ plugin development and without complete list of changes I failed.

I mentioned readable tests yesterday and I have found following text in book Clean Code today.

What makes a clean test? Three things. Readability, readability, and readability. Readability is perhaps even more important in unit tests than it is in production code. What makes tests readable? The same thing that makes all code readable: clarity, simplicity, and density of expression. In a test you want to say a lot with as few expressions as possible.It is nice to find confirmation of my beliefs in the book I like a lot. Thinking about it I am not sure how I acquired this knowledge. Whether it was from some book or article or I just realized it after seeing a lot of bad tests (and writing them too). Indeed, it happened many times that I found much easier to understand production code and then I was not able to decipher tests to see how to modify them (or how to add new test). Or to find why test is failing. Many times it seems that people care much less about the tests. It would be interesting to know whether they do not care or do not believe they are worth of effort or they just do not know how to do them better. I suspect the last possibility is culprit most of the times. Fortunately this is the easiest to fix. It just needs learning...

My colleague wanted to add comment to recent post, asking how it is possible to achieve good quality of code. Somehow it did not work as he did not see captcha code. Anyway, I owe him an answer (I hope it was not rhetorical question).

What to do?

In my opinion it is not very hard task. To achieve good internal quality of code (easy to modify and extend, no bugs, no environment for breeding new bugs) a few "simple" things must be in use:Continuous learning

Without learning we cannot grow, but because our code base grows, new situations happen all the time and some of them cannot be handled by applying of old tricks (like for example "comments and Javadoc everywhere" that was useful in old days). If you look at your code written 5 years ago and you are satisfied with it, you are probably doing something wrong.Writing readable and expressive code

If code is not readable it takes long time to understand what is going on. If we do not understand code we need to modify problems will occur sooner or later.Continuous and merciless refactoring

Everybody should try to enhance any code they believe can be enhanced. Piece of code could be written nicely, delivering all expected behaviours in the beginning, but it will inevitably rot with time as new features are added, requirements are changed an so on. So it is important to counter attack it with refactoring. Nobody should be blamed if piece of code looks strange and contains code smells after some time. But I believe that all developers should be blamed if they allowed it to happen without trying to fix it. Continuous enhancement of existing code might look like daunting task, but it does not need to be. Any small enhancement every day will do (like renaming local variable or extraction of method). If all team members are doing it continuously they will be rewarded with great code base after some time.Good tests - unit, integration, acceptance

With "good" I do not mean covering everything, but written in way they do not stand in way of future enhancements. They need to be simple, readable and modifiable. Otherwise they will become part of legacy code we wanted to avoid by introducing them. Coverage is important too, but if you are afraid to look at test code because there is no way you could understand it quickly, you have bigger problem, that gets even bigger with time until tests stop serving their purpose and only prevent changes (enhancements) of production code.It works for me

There are more approaches, like pair programming, code reviews, working reasonable hours and so on, but I wanted to mention those that are in grasp of everybody. I said they are simple. What I mean is that there is no magic or something unachievable in them. They only need dedication, persistence and enthusiasm. There might be another virtue needed - courage. Courage to go against main stream of developers (and managers) that are afraid of changes ("If it ain't broken don't fix it" is their mantra). They are just paralysed by their fear. For example I have heard excuses like "But you don't know what class in other module could depend on this so you should not change public API." Well, it seems reasonable, but unfortunately it is good as excuse only. Many potential problems caused by refactoring will be caught by tests for module and all others by integration build. So if it really happened, that some unforeseen dependence failed to compile I will know soon enough. Compare that risk with its alternative - having that terrible code staying as is for next 2-3 years, causing unforeseen problems as other people will hack new features into it in the future. Does refactoring of code still look like bigger risk to you?Does your build system allow you to depend on tests from different modules?

Added: August 20, 2008

Recently I have created 2 tests for two classes that looked very similar. Well, they were same apart for testing different subclasses of the same parent. Indeed, tested classes were also similar. I have to fix this (on both sides if possible).

I have found this problem when I wanted to introduce new method to interface and was adding test for it. So my first idea was to reuse test implementation for both classes by creating abstract test and two subclasses handling different initialization of tested classes (different class to instantiate and also different colaborators). Problem is that these two classes together with their tests are not located in the same module. Natural place for abstract test is to put it into module that contains interface, because it is basically testing contract of that interface. Then add concrete tests into modules that implement interface. However our build system does not allow me to do that because tests are not part of built artefact, thus dependent modules do not see them. A workaround for this problem is to introduce a new module that will contain abstract test masked as production class, hence it will be visible from other modules. Of course, this new helper module will not be included in installer of product. All problems solved... Not so fast, theoretically this works perfectly, but it might not be practical if that new module would contain only 1 or 2 abstract tests. It is heavy weight solution for such amount of code. Our codebase contains tens of small modules separating different parts of product to abstract and concrete modules (which is good) and introduction of test-supporting modules might help this number to grow rapidly. Fortunatelly I have found that I can solve this particular problem differently this time, because only occured due to badly factored production classes. If I create abstract superclass for them, I can easily remove duplication of tests and add new test classes testing only logic that is different. Somehow I am getting different moral conclusion that I planned with this post - "Look for real source of problems before attempting to fix them", but that's only because my case was special. In general, it is important to "Make sure your build system allows you to depend on tests from different modules" (only tests should depend, not production code). This allows you to do better tests regarding removing duplication by factoring common code to helpers and introduction of abstract interface tests.Note about testing of intefaces

I was not able to google good example of inteface tests (Apart from that you can read about them in JUnit Recipes by J. B. Rainsberger, but that will not help you to get up to speed without having the book at hand. Still I encourage you to read it later.). So I am going to write a few lines about the idea. Let's have interface Stack.

public interface Stack {

void push(Object data);

Object pop();

boolean isEmpty();

}

This interface has defined contract. It can be specified as Javadoc or any other way, but how can you make sure that all implementations comply to it?

The best way to achieve compliance is to provide abstract test case that implementor extends and provides method for creation of tested instance. Then she can execute test and everybody knows if it is compliant or not in fraction of second.

public abstract class StackTest {

protected abstract Stack createInstance();

public void testShouldPopLastPushedItem() {

Stack tested = createInstance();

//... rest of test

}

public void testShouldThrowExceptionWhenPoppingFromEmpty() {

Stack tested = createInstance();

//... rest of test

}

}

Having these tests for contract it is quite easy to verify our cool implementation of Stack is working:

public class PersistentAndsSecureStackTest extends StackTest {

// add some setUp and tearDown if needed

protected Stack createInstance() {

return new PersistentAndsSecureStack();

}

}

Back to the point

This is only one of the reasons why my tests need to be able to depend on tests from other modules. It is simply strong tool for making sure product is well tested and all duplication is removed.I am still not able to set up dependency of tests between different modules. Fortunatelly I did not need it again.

What is the most useless piece of unit test you have seen?

Added: August 17, 2008

When I was thinking what should be my first post in my new professional blog I have remembered test method (in one undisclosed code base) I have seen a few months ago. It was test for equals(). Rather amusing one, clearly not doing what it was intended for. (I have changed it a bit to protect author and I should state that it was written years ago, thus its author does not do these things anymore).

public final void testEqualsObject() {

final SomeObject objOne = // new ...

final SomeObject objTwo = // new ...

final SomeObject tmpObj = objOne;

assertTrue("The equals() failed", objOne.equals(tmpObj));

assertFalse("The equals() failed", objOne.equals(objTwo));

}

That assertTrue can test only default implementation of Object.equals that uses ==, but nothing more. assertFalse is a bit better, but not enough for quite complicated contract equals and hashCode have.

I have fixed it, unfortunatelly not in way I would like to - by usage of EqualsTester, because this and all other implementations of equals() use

instanceof that is controversial enough to start fighting about right now.

Do me a favor, any time you need to test equals and hashCode use EqualsTester, please. You will not regret it in the long term.

What is the most useless piece of unit test you have seen? Your code done in past counts too ;-) How did you fix it?