A recent blog post by Uncle Bob got me curious about a new metric he mentioned there: Change Risk Analysis and Predictions, or CRAP for short.

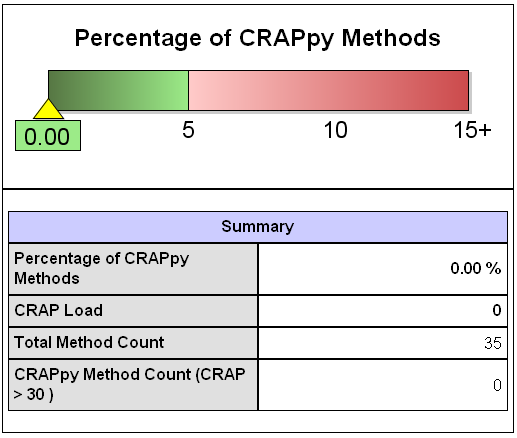

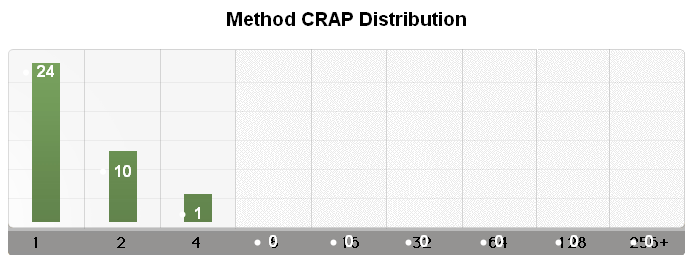

It combines the cyclomatic complexity of code with its coverage by unit tests. An increase in the first signals a worsening code base that breeds bugs, while an increase in the second helps fight code rot by making refactoring safe and by exposing bugs when they occur. In theory, of course; your mileage may vary.

In my opinion the two are good candidates for one combined metric, because code coverage alone, for example, does not say much. Let's say we have 80% coverage. That number does not tell us whether the remaining 20% is untested because it is too simple to break or because it is just too hard to test. But when complexity raises the value of the metric and code coverage lowers it, then with the right threshold it can point nicely to the places that deserve your attention.

So I was interested to see how the results of my recent work would stand up to this test. It took me some time to get crap4j running correctly, because there is some problem with inner classes in test classes, and the only way of setting CRAP4J_HOME that worked for me was to modify ANT_OPTS. I was quite delighted to see 0% CRAP in several of the small modules I tried it on. Unfortunately I did not have time to try it on older and bigger modules (not to mention that the results would be polluted by other people's code, and I wanted to see my own results to give a boost to my ego ;-) ). I might do that later, but I will not publish any results here. Some screenshots are shown above.
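To make the combination concrete, here is a minimal Python sketch of the per-method score. The formula and the threshold of 30 are what I recall from the crap4j documentation, not something taken from the post that started this, so treat both as assumptions; the function names are made up for illustration.

```python
# Assumed formula (as I recall it from crap4j):
#   CRAP(m) = comp(m)^2 * (1 - cov(m) / 100)^3 + comp(m)
# comp(m) is the cyclomatic complexity of method m,
# cov(m) is its test coverage in percent.

CRAP_THRESHOLD = 30  # assumed default threshold for a "crappy" method


def crap_score(complexity: int, coverage_percent: float) -> float:
    """Return the CRAP score of a single method (hypothetical helper)."""
    if complexity < 1:
        raise ValueError("cyclomatic complexity is at least 1")
    if not 0 <= coverage_percent <= 100:
        raise ValueError("coverage must be between 0 and 100")
    uncovered = 1.0 - coverage_percent / 100.0
    # Complexity raises the score, coverage lowers it.
    return complexity ** 2 * uncovered ** 3 + complexity


def is_crappy(complexity: int, coverage_percent: float) -> bool:
    """True when the score is above the assumed threshold."""
    return crap_score(complexity, coverage_percent) > CRAP_THRESHOLD


print(crap_score(5, 0))     # simple, untested method: 30.0
print(crap_score(10, 50))   # more complex, half covered: 22.5
print(is_crappy(10, 0))     # complex and untested: True
```

With this formula a fully covered method scores exactly its complexity, so even perfect coverage cannot rescue a method whose complexity alone is above the threshold.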

In my opinion it is a very nice metric with a fantastic name (it was the name that caught my attention). I can imagine the name creating interesting peer pressure when applied in a team. On the other hand, I cannot imagine a worse name from a manager's point of view. Which manager would like to see a report with such a juicy name on his desk, showing him how poorly his subordinates work? :-) And which manager would not be afraid to show it to his superiors or peers?

Today I heard an interesting thing. Our deadline for committing to the plan for next year was moved from the originally planned date to some time in the middle of June. "I hope it will help us to get better estimates," we were told (this is my interpretation; the original wording was probably a bit different).

I kept quiet, as I would not have been able to change that decision anyway. Still, I wonder how giving us 2 or 3 more weeks will improve our ability to estimate the following 12 months. Why does management feel better guessing what 20 people can do in 12 months than estimating only 1-2 months, performing the planned tasks, and then checking where we got? But that is not the reason for this post. It reminded me of an important piece of information I found some time ago in *Implementing Lean Software Development: From Concept to Cash*:

> Goldratt believes that the key constraint of projects - he considers the product development to be a project - is created when estimates are regarded as commitments. [...] Since the estimate will be regarded as a commitment, the estimator accommodates by including a large amount of a "safety" in case things go wrong. However, even if things go well, the estimated time will be used up anyway, since estimators don't want to look like they over-estimated.

and a bit later in the same chapter:

> In fact, if half of the activities don't take longer than their estimated time, the system will not achieve the desired improvement.

This is the important bit of information: when all tasks are completed within their estimated time, we cannot be sure we did not waste time. But when some of them (ideally 50%) take longer than estimated, one possible reason is that our estimates are very close to the minimal time needed, and thus waste is minimized.
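The argument can be played with in a small simulation. This is only a toy sketch under loud assumptions of my own: all tasks are similar and share one estimate, actual durations follow a made-up log-normal distribution, and, as in the quoted passage, a task that finishes early still uses up its full estimate. None of the numbers come from the book.

```python
import random
import statistics


def elapsed_time(actuals, estimate):
    """Total time spent when early finishes still consume the full estimate."""
    return sum(max(actual, estimate) for actual in actuals)


def overrun_share(actuals, estimate):
    """Fraction of tasks that took longer than the estimate."""
    return sum(actual > estimate for actual in actuals) / len(actuals)


rng = random.Random(42)  # fixed seed so the run is repeatable
# Hypothetical actual durations in days for 1000 similar tasks.
actual_days = [rng.lognormvariate(1.0, 0.5) for _ in range(1000)]

# "Tight" estimate: the median, so about half of the tasks overrun it.
tight_estimate = statistics.median(actual_days)
# "Safe" estimate: padded so that roughly 90% of tasks fit within it.
padded_estimate = sorted(actual_days)[int(0.9 * len(actual_days))]

for label, estimate in (("tight", tight_estimate), ("padded", padded_estimate)):
    print(
        f"{label}: overruns {overrun_share(actual_days, estimate):.0%}, "
        f"total {elapsed_time(actual_days, estimate):.0f} days"
    )
```

Under these assumptions the padded plan almost never "fails", yet it always consumes at least as much total time as the tight one, because the safety is spent whether it was needed or not.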